Running Wan2.1 Text-to-Video Model on macOS

I've been experimenting with running the Wan2.1 text-to-video model on macOS, adapting it to run on M1 Pro chips through Metal Performance Shaders (MPS) with help from Cursor AI.

Adapting Wan2.1 for macOS

The key to making Wan2.1 work on macOS was:

- Using MPS (Metal Performance Shaders) instead of CUDA

- Setting `PYTORCH_ENABLE_MPS_FALLBACK=1` for CPU fallback

- Adjusting memory usage with `--offload_model True` and `--t5_cpu`
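One way to wire the first two points into a launch script is to choose the device from the platform and enable the fallback only when MPS is selected. This is a minimal sketch, not part of the fork: `pick_device` and `DEVICE` are hypothetical names, and it only targets Apple Silicon (Darwin/arm64), which is the hardware covered here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pick the value to pass as --device.
# Apple Silicon Macs report Darwin/arm64; this sketch treats everything else as CPU.
pick_device() {
  local os=${1:-$(uname -s)} arch=${2:-$(uname -m)}
  if [[ "$os" == "Darwin" && "$arch" == "arm64" ]]; then
    echo mps
  else
    echo cpu
  fi
}

DEVICE=$(pick_device)
if [[ "$DEVICE" == "mps" ]]; then
  # Export here, not inside pick_device: $(...) runs in a subshell, so an
  # export there would never reach the Python process launched afterwards.
  # With the fallback on, ops that MPS lacks run on the CPU instead of raising.
  export PYTORCH_ENABLE_MPS_FALLBACK=1
fi
echo "Using device: $DEVICE"
```

You would then pass `--device "$DEVICE"` to the script. The `uname` check only confirms the hardware; `python -c "import torch; print(torch.backends.mps.is_available())"` confirms that the installed PyTorch build can actually use MPS.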

Results

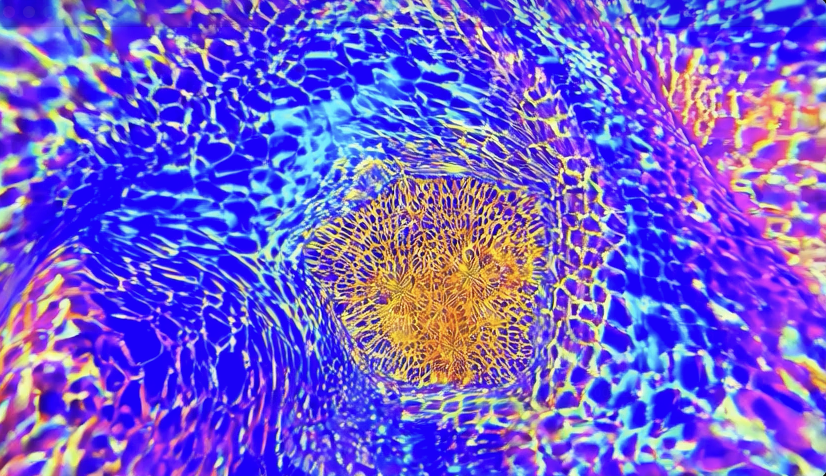

I generated these first samples on a MacBook Pro (M4 Max, 128 GB RAM) and then concatenated them with ffmpeg. The program used around 100 GB of RAM.

Command to generate multiple short videos (call `generate.py` instead to generate a single video):

```bash
export PYTORCH_ENABLE_MPS_FALLBACK=1

python generate_loop.py \
  --task t2v-1.3B \
  --size "832*480" \
  --frame_num 48 \
  --sample_steps 15 \
  --ckpt_dir ./Wan2.1-T2V-1.3B \
  --offload_model True \
  --t5_cpu \
  --device mps \
  --num_videos 15 \
  --output_dir loop_output \
  --concat \
  --concat_output psychedelic_journey.mp4 \
  --prompt "A mesmerizing journey through a kaleidoscopic dimension where reality bends and flows. Vibrant colors swirl and morph into impossible geometries, creating a hypnotic dance of light and form. The scene continuously evolves, with each moment revealing new patterns and textures that seem to breathe and pulse with energy. The colors shift between electric blues, neon purples, and molten golds, creating a sense of infinite depth and movement."
```
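If you only have `generate.py`, a plain Bash loop can approximate the multi-video run. This is a sketch, not the author's `generate_loop.py`: `generate_clips`, `DRY_RUN`, and the `clip_NNN.mp4` naming are hypothetical, and it assumes `generate.py` accepts `--base_seed` and `--save_file` as upstream Wan2.1 does (check `python generate.py --help`) alongside the fork's `--device` flag.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for generate_loop.py: one generate.py call per clip.
# ASSUMPTION: generate.py accepts --base_seed and --save_file (as in upstream
# Wan2.1) plus this fork's --device flag; confirm with `python generate.py --help`.
set -euo pipefail
export PYTORCH_ENABLE_MPS_FALLBACK=1

generate_clips() {
  local num_videos=$1 output_dir=$2 prompt=$3
  local i out
  mkdir -p "$output_dir"
  for ((i = 0; i < num_videos; i++)); do
    # Zero-padded names keep a later `loop_output/*.mp4` glob in generation order.
    out=$(printf '%s/clip_%03d.mp4' "$output_dir" "$i")
    local cmd=(python generate.py
      --task t2v-1.3B --size "832*480" --frame_num 48 --sample_steps 15
      --ckpt_dir ./Wan2.1-T2V-1.3B --offload_model True --t5_cpu
      --device mps --base_seed "$i" --save_file "$out"
      --prompt "$prompt")
    if [[ -n "${DRY_RUN:-}" ]]; then
      printf '%q ' "${cmd[@]}"   # print the command instead of running it
      echo
    else
      "${cmd[@]}"
    fi
  done
}
```

For example, `DRY_RUN=1 generate_clips 15 loop_output "your prompt"` prints the 15 commands without running them. Using the loop index as the seed gives each clip a different, reproducible starting point from the same prompt.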

Command to concatenate the videos (ffmpeg does not create the `output` directory itself):

```bash
mkdir -p output
ffmpeg -y -f concat -safe 0 -i <(for f in loop_output/*.mp4; do echo "file '$PWD/$f'"; done) -c copy output/concatenated.mp4
```
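The one-liner above feeds ffmpeg's concat demuxer a generated list through process substitution. If you prefer a list file you can inspect, or a shell without `<(...)`, a small helper can write it instead. This is a sketch under assumptions: `write_concat_list` is a hypothetical name, and it assumes file names contain no single quotes (those would need escaping in the list).

```bash
#!/usr/bin/env bash
# Hypothetical helper: write an ffmpeg concat-demuxer list for every .mp4 in a
# directory, using absolute paths (hence -safe 0 when ffmpeg reads it back).
# ASSUMPTION: file names contain no single quotes.
write_concat_list() {
  local dir=$1 list=$2 f abs_dir
  abs_dir=$(cd "$dir" && pwd) || return 1
  : > "$list"                        # create or truncate the list file
  for f in "$abs_dir"/*.mp4; do      # bash expands globs in sorted order
    [[ -e "$f" ]] || continue        # no matches: skip the literal pattern
    printf "file '%s'\n" "$f" >> "$list"
  done
}
```

After `write_concat_list loop_output clips.txt`, run `ffmpeg -y -f concat -safe 0 -i clips.txt -c copy output/concatenated.mp4`. Because glob order is lexicographic, `clip_10.mp4` would sort before `clip_2.mp4`, so zero-padded names keep clips in order. Stream copy (`-c copy`) also expects every clip to share the same codecs and parameters, so mixing portrait and landscape clips would need re-encoding.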

Another compilation in portrait mode.

Memory Optimization

The 1.3B model works better than the 14B model on Mac hardware. Key findings:

- 32 frames at 480x832 resolution works reliably

- Higher resolutions or frame counts can cause memory issues
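A rough way to see why resolution and frame count matter is to estimate how many tokens the diffusion transformer attends over. The sketch below assumes Wan2.1's VAE downsamples 4× in time (keeping the first frame) and 8× in each spatial dimension, and that the transformer uses 1×2×2 patches; these are my reading of the upstream configs, not something verified on this fork, and `wan_tokens` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Rough sequence length seen by the Wan2.1 diffusion transformer.
# ASSUMPTIONS: VAE stride 4 (time, first frame kept) x 8 x 8 (space) and a
# 1x2x2 patch size; verify against the model code you are running.
wan_tokens() {
  local frames=$1 width=$2 height=$3
  local latent_t=$(( (frames - 1) / 4 + 1 ))
  local latent_h=$(( height / 8 ))
  local latent_w=$(( width / 8 ))
  echo $(( latent_t * (latent_h / 2) * (latent_w / 2) ))
}

for frames in 32 48; do
  tokens=$(wan_tokens "$frames" 832 480)
  # Full self-attention compares every token with every other token.
  echo "$frames frames at 832x480: $tokens tokens, $(( tokens * tokens )) attention pairs"
done
```

Under these assumptions, 32 frames at 832x480 gives 12,480 tokens and 48 frames gives 18,720, a 1.5× increase in sequence length but a 2.25× increase in attention pairs. Whether memory grows by the same factor depends on whether the attention backend materializes the full score matrix.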

Arrived

Ninja Turdle